Hannah Cox, James Fisher, and I have published a short piece in an outlet called eCampus News. The whole text is here, and I’ll paste the beginning below:

AI is difficult to understand, and its future is even harder to predict. Whenever we face complex and uncertain change, we need mental models to make preliminary sense of what is happening.

So far, many of the models that people are using for AI are metaphors, referring to things that we understand better, such as talking birds, the printing press, a monster, conventional corporations, or the Industrial Revolution. Such metaphors are really shorthand for elaborate models that incorporate factual assumptions, predictions, and value-judgments. No one can be sure which model is wisest, but we should be forming explicit models so that we can share them with other people, test them against new information, and revise them accordingly.

“Forming models” may not be exactly how a group of Tufts undergraduates understood their task when they chose to hold discussions of AI in education, but they certainly believed that they should form and exchange ideas about this topic. For an hour, these students considered the implications of using AI as a research and educational tool, academic dishonesty, big tech companies, attempts to regulate AI, and related issues. They allowed us to observe and record their discussion, and we derived a visual model from what they said.

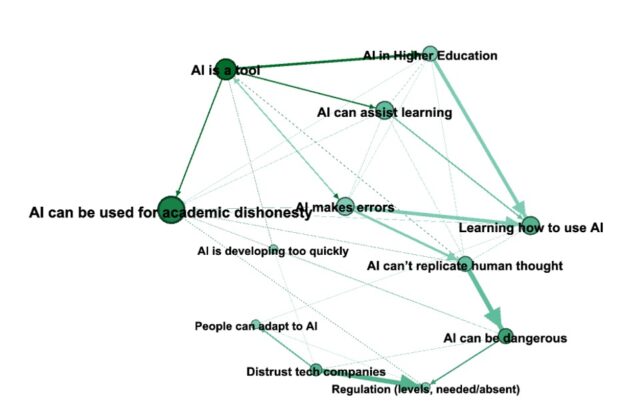

We present this model [see above] as a starting point for anyone else’s reflections on AI in education. The Tufts students are not necessarily representative of college students in general, nor are they exceptionally expert on AI. But they are thoughtful people active in higher education who can help others to enter a critical conversation.

Our method for deriving a diagram from their discussion is unusual and requires an explanation. In almost every comment that a student made, at least two ideas were linked together. For instance, one student said: “If not regulated correctly, AI tools might lead students to abuse the technology in dishonest ways.” We interpret that comment as a link between two ideas: lack of regulation and academic dishonesty. When the three of us analyzed their whole conversation, we found 32 such ideas and 175 connections among them.

The graphic shows the 12 ideas that were most commonly mentioned and linked to others. The size of each dot reflects the number of times that idea was linked to another. The direction of each arrow indicates which factor caused or explained another.

The rest of the published article explores the content and meaning of the diagram a bit.

I am interested in the methodology that we employed here, for two reasons.

First, it’s a form of qualitative research, drawing on Epistemic Network Analysis (ENA) and related methods. As such, it yields a representation of a body of text and a description of what the participants said.

Second, it’s a way for a group to co-create a shared framework for understanding any issue. The graphic doesn’t represent their agreement but rather a common space for disagreement and dialogue. As such, it resembles forms of participatory modeling (Voinov et al, 2018). These techniques can be practically useful for groups that discuss what to do.

Our method was not dramatically innovative, but we did something a bit novel by coding ideas as nodes and the relationships between pairs of ideas as links.
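To make the nodes-and-links idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the procedure we actually used: it assumes each coded comment has already been reduced to a (cause, effect) pair of idea labels, and the function name `summarize_links` and most of the sample pairs are hypothetical. Only the pairing of "lack of regulation" with "academic dishonesty" comes from the student quotation above.

```python
from collections import Counter

# Hypothetical coded data: each pair is (idea that causes or explains, idea affected).
# Only the first pairing is taken from the quoted student comment; the rest are invented.
coded_links = [
    ("lack of regulation", "academic dishonesty"),
    ("AI as research tool", "academic dishonesty"),
    ("big tech companies", "lack of regulation"),
    ("lack of regulation", "academic dishonesty"),
]


def summarize_links(links, top_n=12):
    """Count how often each idea is linked, then keep the top_n most-linked ideas.

    Returns (node_sizes, edge_weights):
      node_sizes   maps idea -> number of links it takes part in (dot size)
      edge_weights maps (cause, effect) -> number of times that link was coded
    """
    degree = Counter()
    for cause, effect in links:
        degree[cause] += 1
        degree[effect] += 1

    # Assumption: "most commonly linked" means highest total link count.
    top = {idea for idea, _ in degree.most_common(top_n)}

    # Keep only the arrows whose two ends both survive the cut.
    edge_weights = Counter(
        (cause, effect) for cause, effect in links if cause in top and effect in top
    )
    node_sizes = {idea: degree[idea] for idea in top}
    return node_sizes, edge_weights


node_sizes, edge_weights = summarize_links(coded_links, top_n=2)
```

In this sketch, dot sizes are counted over the whole set of links before the less-connected ideas are dropped; counting only links among the retained ideas would be an equally defensible choice and would give somewhat different sizes.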

Source: Alexey Voinov et al, “Tools and methods in participatory modeling: Selecting the right tool for the job,” Environmental Modelling & Software, vol 109 (2018), pp. 232-255. See also: what I would advise students about ChatGPT; People are not Points in Space; different kinds of social models; social education as learning to improve models