Jonathan Haidt’s Moral Foundations Theory is one of the most influential current approaches to moral psychology, and it exemplifies certain assumptions that are pervasive in psychology more generally. I have been working lately with 18 friends and colleagues to “map” their moral views in a very different way, driven by different assumptions. As part of this small pilot project, I gave the 18 participants Haidt et al.’s Moral Foundations Questionnaire. Although my sample is small and non-representative, I am interested in the contrasting results that the two methods yield.

Haidt’s underlying assumptions are that people form judgments about moral issues, but these are often gut reactions. The reasons that people give for their judgments are post-hoc rationalizations (Haidt 2012, pp. 27-51; Swidler 2001, pp. 147-8; Thiele 2006). “Individuals are often unable to access the causes of their moral judgments” (Graham, Nosek, Haidt, Iyer, Koleva, & Ditto 2011, p. 368). Hence moral psychologists are most interested in unobserved mental phenomena that can explain our observable statements and actions.

Haidt et al. ask their research subjects multiple-choice questions about moral topics. Once they have collected responses from many subjects, they use factor analysis to find latent variables that can explain the variance in the answers. (Latent variables have been “so useful … that they pervade … psychology and the social sciences” [Bollen, 2002, p. 606].) The variables that are thereby revealed are treated as real psychological phenomena, even though the research subjects may not be aware of them. Haidt and colleagues consider whether each factor names a psychological instinct or emotion that 1) would have had value for evolving Homo sapiens, so that our ancestors would have developed an inborn tendency to embrace it, and 2) is found in many cultures around the world. Now bearing names like “care” and “fairness,” these factors become candidates for moral “foundations.”
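The logic behind latent variables can be illustrated with a small simulation. The sketch below is not Haidt's analysis, and it is not a factor analysis either. It only generates synthetic answers from two made-up hidden traits (labelled `care` and `fair` purely for illustration) and shows the correlation pattern that factor analysis exploits: items driven by the same hidden trait correlate strongly, while items driven by different traits do not. The item names, the loading of 0.8, the sample size, and the assumption that the two traits are independent are all mine, not drawn from the Moral Foundations Questionnaire.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def simulate(n_people=500, loading=0.8, seed=0):
    """Generate synthetic item scores from two hypothetical latent traits.

    Each item = loading * hidden trait + independent noise, scaled so that
    every item has variance 1. All names and numbers are illustrative.
    """
    rng = random.Random(seed)
    noise_sd = sqrt(1 - loading ** 2)
    items = {name: [] for name in ("care_1", "care_2", "fair_1", "fair_2")}
    for _ in range(n_people):
        care = rng.gauss(0, 1)  # hidden trait, never observed directly
        fair = rng.gauss(0, 1)  # second hidden trait, independent of the first
        items["care_1"].append(loading * care + noise_sd * rng.gauss(0, 1))
        items["care_2"].append(loading * care + noise_sd * rng.gauss(0, 1))
        items["fair_1"].append(loading * fair + noise_sd * rng.gauss(0, 1))
        items["fair_2"].append(loading * fair + noise_sd * rng.gauss(0, 1))
    return items


items = simulate()
within = pearson(items["care_1"], items["care_2"])  # same hidden trait
across = pearson(items["care_1"], items["fair_1"])  # different hidden traits
print(f"same trait: r = {within:.2f}; different traits: r = {across:.2f}")
```

A factor analysis works in the opposite direction: it starts from the observed correlations and infers how many hidden traits would be needed to produce them. The respondents in this simulation never "see" their own `care` or `fair` values, which is the sense in which such variables are latent.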

Because Haidt’s method generates a small number of factors, he concludes that people can be classified into large moral groups (such as American liberals and conservatives) whose shared premises determine their opinions about concrete matters like abortion and smoking. “Each matrix provides a complete, unified, and emotionally compelling worldview” (Haidt 2012, p. 107). In this respect, Haidt’s Moral Foundations Theory bears a striking similarity to Rawls’ notion of a “comprehensive doctrine” that “organizes and characterizes recognized values so that they are compatible with each other and express an intelligible view of the world.”

In contrast, I have followed these steps:

- I recruited people I knew. These relationships, although various, probably influenced the results. I don’t entirely see that as a limitation.

- I asked each participant to answer three open-ended questions: “Please briefly state principles that you aspire to live by.” “Please briefly state truths about life or the world that you believe and that relate to your important choices in life.” “Please briefly state methods that you believe are important and valid for making moral or ethical decisions.”

- I interviewed them, one at a time. I began by showing each respondent their own responses to the survey, distributed randomly as dots on a plane. I asked them to link ideas that seemed closely related. When they made links, I asked them to explain the connections, which often (not always) took the form of reasons: “I believe this because of that.” As we talked, I encouraged them to add ideas that had come up during their explanations. I also gently asked whether some of their ideas implied others yet unstated; but I encouraged them to resist my suggestions, and often they did. The result was a network map for each participant with a mean of 20.7 ideas, almost all of which they had chosen to connect together, rather than leaving ideas isolated.

- We jointly moved the nodes of these networks around so that they clustered in meaningful ways. Often the clusters would be about topics like intimate relationships, views of social justice, or limitations and constraints.

- I put all their network maps on one plane and encouraged them to link to each other’s ideas if they saw connections. That process is still under way, but the total number of links proposed by my 18 participants has now reached 1,283.

- I have loosely classified their ideas under 30 headings (Autonomy, Authenticity/integrity/purpose, Balance/tradeoffs, Everyone’s different but everyone contributes, Community, Context, Creativity/making meaning, Deliberative values, Difficulty of being good, Don’t hurt others, Emotion, Family, Fairness/equity, Flexibility, God, Intrinsic value of life, Justice, Life is limited, Maturity/experience, Modesty, No God, Optimism, Peace/stability, Rationality/critical thinking, Serve/help others, Relationships, Skepticism/human cognitive limitations, Striving, Tradition, Virtues). Note that some of these categories resemble Moral Foundations, but several do not. The ones that don’t tend to be more “meta”: they are about how to form moral opinions.

My assumptions are that people can say interesting and meaningful things in response to open-ended questions about moral philosophy; that much is lost if you try to categorize these ideas too quickly, because the subtleties matter; and that a person not only has separate beliefs but also explicit reasons that connect these beliefs into larger structures.

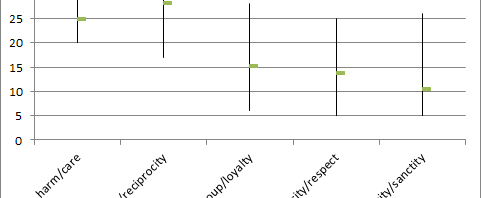

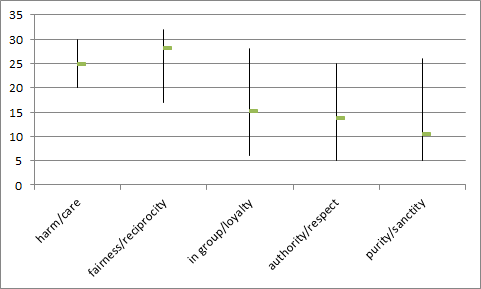

Since I also gave participants the Moral Foundations Questionnaire, I am able to say some things about the group from that perspective. This graph shows the group means and the range for their scores on the five Moral Foundations scales. For comparison, the average responses of politically moderate Americans are 20.2, 20.5, 16.0, 16.5, and 12.6. That means that my group is more concerned about harm/care and fairness/reciprocity than most Americans, and not far from average on other Foundations. But there is also a lot of diversity within the group. Two of my respondents scored 5 out of 35 on the purity scale, and two scored 20 or higher. The range was likewise from 6 to 28 on the in-group/loyalty scale.

You might think that this diversity would somehow be reflected in the respondents’ maps of their own explicit moral ideas and connections. But I see no particular relationship. For instance, one of the people who rated purity considerations as important (a self-described liberal Catholic) produced a map that clustered around virtues of moral curiosity and openness, friendship and love, and a central cluster about justice in institutions. She volunteered no thoughts about purity at all.

This respondent scored 20 on the purity scale. A different person (self-described as an atheist liberal) scored 9 on that scale. But they chose to connect their respective networks through shared ideas about humility, deliberation, and justice.

The whole group did not divide into clusters with distinct worldviews but overlapped a great deal. To preserve privacy, I show an intentionally tiny picture of the current group’s map that reveals its general shape. There are no signs of separate blocs, even though respondents did vary a lot on some of the “Foundations” scales.

A single-word node that appears in five different people’s networks is “humility.” It also ranks fourth out of 375 ideas in closeness and betweenness centrality (two different measures of importance in a network). It is an example of a unifying idea for this group.
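For readers unfamiliar with these two measures, here is a minimal sketch of how they can be computed. It treats the map as an undirected, unweighted graph, which is my simplifying assumption; the real maps may well carry direction ("this because of that"). The seven ideas and six links are a hypothetical toy example, not data from the project. Closeness is computed as the number of reachable ideas divided by the sum of shortest-path distances to them, and betweenness uses Brandes' algorithm to count the shortest paths between other ideas that pass through a given idea.

```python
from collections import deque


def build_graph(edges):
    """Turn a list of (idea, idea) links into an undirected adjacency map."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def closeness(graph):
    """Closeness: reachable nodes divided by total shortest-path distance."""
    result = {}
    for source in graph:
        dist = {source: 0}
        queue = deque([source])
        while queue:  # breadth-first search gives shortest hop counts
            v = queue.popleft()
            for w in graph[v]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
        total = sum(dist.values())
        result[source] = (len(dist) - 1) / total if total else 0.0
    return result


def betweenness(graph):
    """Unnormalized betweenness via Brandes' algorithm (undirected graph)."""
    score = {v: 0.0 for v in graph}
    for s in graph:
        order = []                        # nodes in order of discovery
        preds = {v: [] for v in graph}    # predecessors on shortest paths
        sigma = {v: 0 for v in graph}     # number of shortest paths from s
        sigma[s] = 1
        dist = {s: 0}
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in graph[v]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = {v: 0.0 for v in graph}
        while order:                      # accumulate from the farthest node back
            w = order.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    # Each unordered pair was counted from both endpoints, so halve the totals.
    return {v: b / 2 for v, b in score.items()}


# Hypothetical toy map: "humility" bridges three small clusters of ideas.
edges = [
    ("humility", "deliberation"), ("deliberation", "listening"),
    ("humility", "justice"), ("justice", "fairness"),
    ("humility", "openness"), ("openness", "curiosity"),
]
graph = build_graph(edges)
cl = closeness(graph)
bt = betweenness(graph)
for idea in sorted(graph, key=bt.get, reverse=True):
    print(f"{idea:13s} closeness={cl[idea]:.2f} betweenness={bt[idea]:.1f}")
```

In this toy map, "humility" comes out on top on both measures because every path between the three clusters runs through it. An idea can score high on one measure and not the other in a larger network, which is why it is worth reporting both.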

Many of the ideas that people proposed have to do with deliberative values: interacting with other people, learning from them, forming relationships, and trying to improve yourself in relation to others. Those are not really options on the Moral Foundations Questionnaire. They are important virtues if we hold explicit moral ideas and reasons and can improve them. They are not important virtues, however, if we are driven by unrecognized latent factors.

One way to compare the two methods would be to ask which one is better able to predict human behavior. That is an empirical question, but a complex one because many different kinds of behavior might be treated as outcomes. In any case, it’s not the only way to compare the two methods. They also have different purposes. Moral Foundations is descriptive and perhaps diagnostic–helping us to understand why we disagree. The method that I am developing is more therapeutic, in the original sense: designed to help us to reflect on our own ideas with other people we know, so that we can improve.

[References: Bollen, Kenneth A. 2002. Latent Variables in Psychology and the Social Sciences. Annual Review of Psychology, vol. 53, 605-634; Graham, Jesse, Nosek, Brian A., Haidt, Jonathan, Iyer, Ravi, Koleva, Spassena, & Ditto, Peter H. 2011. Mapping the Moral Domain. Journal of Personality and Social Psychology, 101:2; Haidt, Jonathan. 2012. The Righteous Mind: Why Good People Are Divided by Politics and Religion. New York: Vintage; Swidler, Ann. 2001. Talk of Love: How Culture Matters. Chicago: University of Chicago Press; Thiele, Leslie Paul. 2006. The Heart of Judgment: Practical Wisdom, Neuroscience, and Narrative. Cambridge University Press.]